My Research Interests

Swiss National Science Foundation Project 2100-57176.99

Collaborators: Dr. Mirela Borici, dipl. ing. Damir Pasalic.

-

The aim of our work is to develop a device simulator, especially for the High Electron Mobility Transistor (HEMT), that can be coupled to a full-wave simulation model based on the finite-difference time-domain (FDTD) method. Our institute's extensive experience with computational electromagnetics will be a major advantage when the HEMT simulator is coupled to the FDTD field solver.

As described in the research plan of our project, our group

had to enter the new field of numerical semiconductor device modelling.

In the first year of our project, we gained experience

with the numerical simulation

of the hydrodynamic model (HDM). This model allows a more accurate

description of electron transport than the conventional drift diffusion

model or energy balance models, which are used in commercial semiconductor

device simulators. In contrast to the drift diffusion model,

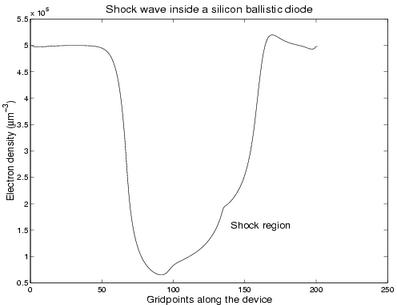

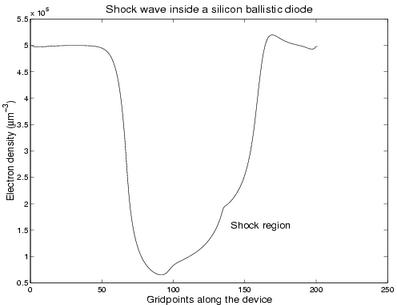

the HDM predicts shock waves in the electron gas. This

necessitates the use of shock capturing algorithms (i.e. upwind methods).
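To illustrate why upwind schemes capture shocks, here is a minimal sketch on the inviscid Burgers equation u_t + (u²/2)_x = 0, a standard scalar model problem rather than the full HDM. It assumes u > 0 everywhere, so the upwind direction is always to the left; the grid, the step initial data and the time step are illustrative choices, not values from our programs.

```python
import numpy as np


def burgers_upwind(u0, dx, dt, steps):
    """First-order conservative upwind scheme for u_t + (u**2 / 2)_x = 0.

    Assumes u > 0 everywhere, so information always travels to the right and
    the interface flux F_{i+1/2} is simply f(u_i). The first cell is held
    fixed as an inflow boundary.
    """
    u = np.asarray(u0, dtype=float).copy()
    if np.any(u <= 0):
        raise ValueError("this sketch requires u > 0")
    # The scheme is monotone for dt * max(u) / dx <= 1, so max(u) never grows
    # and checking the initial data is enough.
    if dt / dx * u.max() > 1.0:
        raise ValueError("CFL condition violated: dt * max(u) / dx must be <= 1")
    for _ in range(steps):
        flux = 0.5 * u**2  # f(u) at the cell centres
        # Conservative update u_i -= dt/dx * (F_{i+1/2} - F_{i-1/2}) with F_{i+1/2} = f(u_i)
        u[1:] -= dt / dx * (flux[1:] - flux[:-1])
    return u


# Step initial data: u = 2 left of x = 0.25, u = 1 to the right.
n_cells = 200
dx = 1.0 / n_cells
x = (np.arange(n_cells) + 0.5) * dx  # cell centres
u0 = np.where(x < 0.25, 2.0, 1.0)
dt = 0.002   # CFL number dt * max(u) / dx = 0.8
steps = 100  # final time t = 0.2
u = burgers_upwind(u0, dx, dt, steps)

# Rankine-Hugoniot shock speed is (2 + 1) / 2 = 1.5, so the shock should be near x = 0.55.
shock_x = x[np.argmax(u < 1.5)]
print(f"numerical shock position ~ {shock_x:.3f}")
```

Because the update is written in conservation form, the discrete shock moves at the Rankine-Hugoniot speed, and the one-sided flux adds just enough numerical diffusion to keep the profile free of oscillations. A forward-Euler update with centred differences applied to the same problem is unstable.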

You can download a simple but instructive MATLAB program here. It simulates the electron density, electron velocity, current, temperature and related quantities inside a ballistic diode (an n+/n/n+ doped piece of silicon) within the full hydrodynamic model.

The program uses scaled units: for example, the mass unit is 10^-7 kg and lengths are measured in micrometers (see the header of the program).

The diode is 0.3 µm thick and is split into three layers of 0.1 µm each. The contact layers are highly doped (5*10^23 donors/m^3), while the middle layer has a donor concentration of 2*10^21 m^-3. The applied bias rises from 0 to 1 V during the simulation. Finally, a hydrodynamic shock develops, visible in the upper curve at grid point 80.

The MATLAB file: hydro.m.

The initialization file: initializer.mat.

Details can be found in this paper.
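The layer structure of the diode is easy to set up on a uniform grid. The sketch below assumes the donor densities are given per m^3 (5*10^23 m^-3 equals 5*10^17 cm^-3) and converts them to µm^-3 to match lengths in micrometers; the helper name and the choice of 100 grid intervals per layer are hypothetical, since the actual grid is defined in hydro.m.

```python
import numpy as np

UM3_PER_M3 = 1e18  # 1 m^3 = 10^18 um^3


def ballistic_diode_doping(points_per_layer=100, layer_um=0.1,
                           nd_contact_m3=5e23, nd_channel_m3=2e21):
    """Donor profile of an n+/n/n+ diode built from three equally thick layers.

    Hypothetical helper: returns node positions in um and donor densities in
    um^-3. The two interface nodes are assigned to the n+ contacts.
    """
    n = points_per_layer
    x = np.arange(3 * n + 1) * (layer_um / n)          # uniform grid in um
    nd = np.full(x.size, nd_contact_m3 / UM3_PER_M3)   # n+ contact doping
    nd[n + 1:2 * n] = nd_channel_m3 / UM3_PER_M3       # n channel (interior nodes only)
    return x, nd


x, nd = ballistic_diode_doping()
print(nd[0], nd[150])  # contact and channel doping in um^-3
```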

Furthermore, we developed a Monte Carlo program to obtain the energy-dependent transport parameters of the HDM for GaAs- and InP-like materials, following mainly the approach of the well-known textbook by K. Tomizawa. The program includes all important scattering mechanisms: impurity scattering, acoustic phonon scattering, and polar and non-polar optical phonon scattering. The nonparabolicity of the Gamma and L bands is also taken into account. As a result, we obtained the momentum and energy relaxation times as functions of the mean electron energy, as well as the energy-dependent electron mass. These results were approximated by polynomial fitting functions, which made it possible to use them in hydrodynamic simulations.
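The heart of such a Monte Carlo program is the free-flight/scattering loop. A minimal sketch of two of its steps is shown below, assuming a constant total rate Gamma that includes fictitious self-scattering, so that free-flight times are exponentially distributed. The rates are made-up placeholders at a single electron energy, not results of our program; in a real code they depend on energy and valley, and the update of the electron state after a real scattering event is omitted. The function `simulate_events` is a hypothetical driver for checking the statistics.

```python
import math
import random


def free_flight_time(gamma_total, rng):
    """Exponentially distributed free-flight time for a constant total rate Gamma."""
    # 1 - rng.random() lies in (0, 1], so the logarithm is always finite.
    return -math.log(1.0 - rng.random()) / gamma_total


def select_mechanism(rates, gamma_total, rng):
    """Pick a scattering mechanism with probability rate / Gamma.

    Returns None for self-scattering, the fictitious process that pads the
    real rates up to Gamma and leaves the electron state unchanged.
    """
    target = rng.random() * gamma_total
    cumulative = 0.0
    for name, rate in rates.items():
        cumulative += rate
        if target < cumulative:
            return name
    return None


def simulate_events(rates, gamma_total, n_events, seed=0):
    """Hypothetical driver: mean free-flight time and how often each mechanism is chosen."""
    if gamma_total < sum(rates.values()):
        raise ValueError("Gamma must be at least the sum of the real scattering rates")
    rng = random.Random(seed)
    total_time = 0.0
    counts = {name: 0 for name in rates}
    counts[None] = 0
    for _ in range(n_events):
        total_time += free_flight_time(gamma_total, rng)
        counts[select_mechanism(rates, gamma_total, rng)] += 1
    return total_time / n_events, counts


# Placeholder rates in 1/s at one electron energy (hypothetical values).
rates = {"polar_optical": 4e12, "impurity": 2e12, "acoustic": 1e12}
gamma = 1e13
mean_t, counts = simulate_events(rates, gamma, 100_000)
print(f"mean free flight {mean_t:.3e} s, counts {counts}")
```

Self-scattering keeps Gamma independent of the electron energy, which is what makes the simple inverse-exponential sampling of the flight time valid.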

Of course, the relation between electric field strength and electron mobility used in drift-diffusion models can also be extracted from the Monte Carlo simulations. These Monte Carlo results are compared with an analytic mobility model used in device simulations in Figure 3.

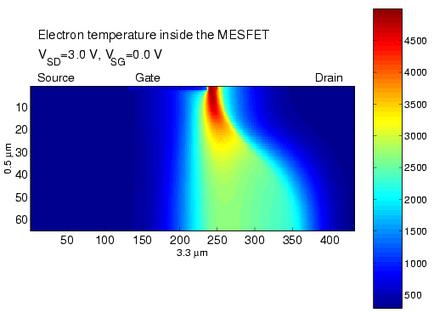

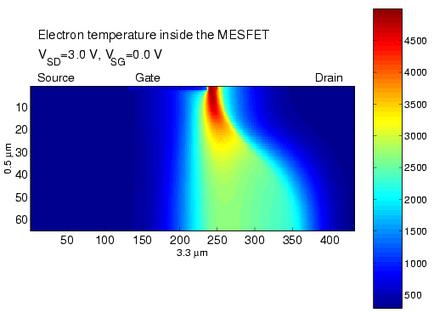
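The analytic model of Figure 3 is not spelled out here. As an assumed example, a widely used field-dependent mobility for GaAs is mu(E) = (mu0 + v_sat E^3 / Ec^4) / (1 + (E / Ec)^4), which reproduces the velocity peak and the negative differential mobility caused by transfer to the L valleys. The parameter values below are illustrative orders of magnitude, not fitted to our Monte Carlo data.

```python
import numpy as np


def gaas_mobility(e_field, mu0=0.85, v_sat=1.0e5, e_crit=4.0e5):
    """Field-dependent electron mobility in m^2/(V s) for a field in V/m.

    mu(E) = (mu0 + v_sat * E**3 / Ec**4) / (1 + (E / Ec)**4)
    Low field: mu -> mu0. High field: mu * E -> v_sat.
    Default parameters are illustrative, not fitted values.
    """
    e = np.abs(np.asarray(e_field, dtype=float))
    return (mu0 + v_sat * e**3 / e_crit**4) / (1.0 + (e / e_crit) ** 4)


def drift_velocity(e_field, **params):
    """Drift velocity magnitude v = mu(E) * |E| in m/s."""
    e = np.abs(np.asarray(e_field, dtype=float))
    return gaas_mobility(e, **params) * e


fields = np.linspace(1e3, 2e6, 2000)  # V/m, i.e. 0.01 to 20 kV/cm
v = drift_velocity(fields)
e_peak = fields[np.argmax(v)]
print(f"peak velocity {v.max():.3g} m/s at {e_peak / 1e5:.2f} kV/cm")
```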

The HDM was applied in one dimension to a silicon ballistic diode (which models the channel of a MOSFET) and in two dimensions to a GaAs MESFET structure. Figure 1 shows a stationary shock wave inside the ballistic diode. Such shock waves also appear in small MESFET devices and cause numerical instabilities if a naive finite-difference discretization is used. First- and second-order upwind discretizations avoid these instabilities, although rather small time steps (of the order of a femtosecond) are required. This is no serious drawback when full-wave propagation inside a device has to be computed, because the stability condition of the FDTD method requires even smaller time steps.
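The time-step argument can be made concrete with the Courant limit of the standard 3D Yee FDTD scheme on a uniform grid, dt <= 1 / (c * sqrt(1/dx^2 + 1/dy^2 + 1/dz^2)). The cubic cell sizes below are assumed for illustration; with vacuum light speed c, cells of 0.1 µm already push the limit below a femtosecond.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def yee_courant_limit(dx, dy, dz, c=C0):
    """Largest stable time step (s) of the 3D Yee FDTD scheme on a uniform grid."""
    return 1.0 / (c * math.sqrt(1.0 / dx**2 + 1.0 / dy**2 + 1.0 / dz**2))


for cell in (1e-6, 1e-7):  # assumed cubic cell sizes: 1 um and 0.1 um
    dt = yee_courant_limit(cell, cell, cell)
    print(f"cell {cell * 1e6:.1f} um -> dt_max = {dt * 1e15:.3f} fs")
```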

The relatively simple structure of the MESFET was well suited for gaining experience with two-dimensional hydrodynamic simulations, since it avoids the heterojunction problem present in the HEMT. Upwind discretization also works very well in two dimensions. Stability problems were observed near the Schottky gate, where the electron density can be extremely small and the electric field strength very large. However, we were able to stabilize this critical region by limiting the electron drift velocity to physical values without changing the important characteristics of the device. See also Figure 2.
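One simple way to implement such a limiter on a 2D grid is sketched below, assuming the cap acts on the magnitude of the drift velocity so that its direction is preserved. The function name and the value of v_max are hypothetical and not taken from our Fortran 90 code.

```python
import numpy as np


def limit_drift_velocity(vx, vy, v_max):
    """Rescale drift velocities whose magnitude exceeds v_max, keeping their direction.

    vx, vy: arrays of velocity components on the grid (m/s).
    Grid points with |v| <= v_max are returned unchanged.
    """
    vx = np.asarray(vx, dtype=float)
    vy = np.asarray(vy, dtype=float)
    speed = np.hypot(vx, vy)
    scale = np.ones_like(speed)
    too_fast = speed > v_max
    scale[too_fast] = v_max / speed[too_fast]
    return vx * scale, vy * scale


vx = np.array([[1e5, 0.0], [6e5, -3e5]])
vy = np.array([[0.0, 0.0], [8e5, 4e5]])
vx_lim, vy_lim = limit_drift_velocity(vx, vy, v_max=5e5)
```

Only the point with |v| = 10^6 m/s is rescaled (to 3*10^5, 4*10^5); the others, including the one exactly at the cap, are left untouched.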

Our results agree well with the literature, although only limited data are available for comparison.

For the one-dimensional calculations we used MATLAB as a convenient programming language, but for the computationally expensive 2D HDM calculations we used Fortran 90.

The numerical solution of the Poisson equation was obtained with a multigrid algorithm, which made it possible to perform the calculations on our local workstations at the ETH.

The grid was uniform in both the x- and y-directions and contained three coarser subgrids. An approximate global solution of the Poisson equation is computed with the standard Gauss-Seidel method on the coarsest grid; this solution is then transferred to the finer grids and improved by several correction cycles.
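A compact 1D analogue of this scheme is sketched below (our production code was 2D Fortran 90). Gauss-Seidel on the coarsest grid gives a first approximation, which is linearly interpolated to successively finer grids and improved there by multigrid V-cycles (Gauss-Seidel smoothing, full-weighting restriction, linear interpolation). It solves -u'' = f on [0, 1] with u(0) = u(1) = 0; the number of levels, sweeps and cycles are assumed values, and the V-cycles recurse further down than the three subgrids mentioned above.

```python
import numpy as np


def gauss_seidel(u, f, h, sweeps):
    """In-place Gauss-Seidel sweeps for -u'' = f; boundary values stay fixed."""
    for _ in range(sweeps):
        for i in range(1, len(u) - 1):
            u[i] = 0.5 * (u[i - 1] + u[i + 1] + h * h * f[i])
    return u


def residual(u, f, h):
    """Discrete residual r = f + u'', zero on the boundary."""
    r = np.zeros_like(u)
    r[1:-1] = f[1:-1] + (u[:-2] - 2.0 * u[1:-1] + u[2:]) / (h * h)
    return r


def restrict(r):
    """Full weighting from the fine grid onto every second node."""
    rc = r[::2].copy()
    rc[1:-1] = 0.25 * r[1:-2:2] + 0.5 * r[2:-1:2] + 0.25 * r[3::2]
    return rc


def prolong(uc):
    """Linear interpolation from a coarse grid to the next finer grid."""
    u = np.zeros(2 * (len(uc) - 1) + 1)
    u[::2] = uc
    u[1::2] = 0.5 * (uc[:-1] + uc[1:])
    return u


def v_cycle(u, f, h, sweeps=2):
    """One multigrid correction cycle for -u'' = f (u is updated in place)."""
    if len(u) <= 3:  # a single interior node: solve exactly
        u[1] = 0.5 * (u[0] + u[2] + h * h * f[1])
        return u
    gauss_seidel(u, f, h, sweeps)                          # pre-smoothing
    rc = restrict(residual(u, f, h))
    ec = v_cycle(np.zeros_like(rc), rc, 2.0 * h, sweeps)   # coarse-grid error equation
    u += prolong(ec)                                       # coarse-grid correction
    gauss_seidel(u, f, h, sweeps)                          # post-smoothing
    return u


def nested_multigrid(f_func, n_coarse=4, levels=5, cycles=3):
    """Gauss-Seidel on the coarsest grid, then interpolate and correct level by level."""
    n = n_coarse
    x = np.linspace(0.0, 1.0, n + 1)
    u = gauss_seidel(np.zeros(n + 1), f_func(x), 1.0 / n, sweeps=100)
    for _ in range(levels):
        u = prolong(u)
        n *= 2
        x = np.linspace(0.0, 1.0, n + 1)
        f = f_func(x)
        for _ in range(cycles):
            v_cycle(u, f, 1.0 / n)
    return x, u


# Test problem with known solution u(x) = sin(pi x).
x, u = nested_multigrid(lambda x: np.pi**2 * np.sin(np.pi * x))
print(f"max error: {np.max(np.abs(u - np.sin(np.pi * x))):.2e}")
```

Because each level starts from an interpolated solution that is already close, a few correction cycles per level reach the discretization accuracy, which is why the cost stays proportional to the number of grid points.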

S.M. Ghazaly used the successive over-relaxation (SOR) method, but on a massively parallel machine (MasPar MP-2) with an array of 64 × 128 processing elements. Multigrid methods are known to be among the fastest methods for solving the Poisson equation. We also observed that it is not necessary to solve the Poisson equation at every time step, which reduced the simulation time by a factor of about ten.

Multigrid methods may well also provide a means to simulate HEMT devices, where the heterojunction region has to be resolved on a much smaller scale than the surrounding layers.

We were thus able to follow our project schedule for phase 1 of the project. Since heterojunctions, e.g. in a HEMT, pose additional problems from both the numerical and the physical point of view, we also concentrated on this subject.

Here we deviated slightly from the initial research plan, which foresaw treating heterostructures in HEMTs in the second year of the project. We also performed one-dimensional simulations of quantum well structures, based on relatively simple (thermionic) physical models for the heterojunction region and on the drift-diffusion model for the homogeneous semiconductor material. In this way we gained experience that will become important when we turn our attention to numerical HEMT modelling.
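For orientation, the simplest thermionic-emission expression is the Richardson-Dushman law J = A* T^2 exp(-q Phi_B / (k_B T)), with the effective Richardson constant A* = 4 pi q m* k_B^2 / h^3. Thermionic heterojunction models in drift-diffusion codes are commonly built from net fluxes of this kind; the exact boundary conditions we used are not reproduced here, and the GaAs-like effective mass ratio 0.067 and the barrier heights are assumed example values.

```python
import math

Q = 1.602176634e-19     # elementary charge, C
K_B = 1.380649e-23      # Boltzmann constant, J/K
H = 6.62607015e-34      # Planck constant, J s
M0 = 9.1093837015e-31   # free electron mass, kg


def richardson_constant(m_eff_ratio):
    """Effective Richardson constant A* = 4 pi q m* k_B^2 / h^3 in A m^-2 K^-2."""
    return 4.0 * math.pi * Q * m_eff_ratio * M0 * K_B**2 / H**3


def thermionic_current_density(barrier_eV, temperature_K, m_eff_ratio):
    """Richardson-Dushman current density (A/m^2) over a barrier of height barrier_eV."""
    a_star = richardson_constant(m_eff_ratio)
    kT_eV = K_B * temperature_K / Q
    return a_star * temperature_K**2 * math.exp(-barrier_eV / kT_eV)


# Assumed example: GaAs-like effective mass (0.067 m0) at 300 K.
for barrier in (0.2, 0.3):
    j = thermionic_current_density(barrier, 300.0, 0.067)
    print(f"barrier {barrier:.1f} eV -> J = {j:.3e} A/m^2")
```

Raising the barrier by 0.1 eV at room temperature reduces the current by roughly a factor of 50, which illustrates how sensitive the heterojunction current is to the band offset.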

-

During the first half of the twentieth century, achievements in Europe dominated progress in physics, from the discovery of the electron to the atomic nucleus and its constituents, from special relativity to quantum mechanics. Sadly, the conflicts of the 1930s and 40s interrupted this as many scientists had to leave for calmer shores. The return of peace heralded some decisive changes. By the early 50s, the Americans had understood that further progress needed more sophisticated instruments, and that investment in basic science could drive economic and technological development. While scientists in Europe still relied on simple equipment based on radioactivity and cosmic rays, powerful accelerators were being built in the US. Table-top experiments were being overtaken by projects involving large teams of scientists and engineers.

A few far-sighted physicists, such as Rabi, Amaldi, Auger and de Rougemont, perceived that co-operation was the only way forward for front-line research in Europe. Despite fine intellectual traditions and prestigious universities, no European country could cope alone. The creation of a European Laboratory was recommended at a UNESCO meeting in Florence in 1950, and less than three years later a Convention was signed by 12 countries of the Conseil Européen pour la Recherche Nucléaire. CERN was born, the prototype of a chain of European institutions in space, astronomy and molecular biology, and Europe was poised to regain its illustrious place on the scientific map.

CERN exists primarily to provide European physicists with accelerators that meet research demands at the limits of human knowledge. In the quest for higher interaction energies, the Laboratory has played a leading role in developing colliding beam machines. Notable "firsts" were the Intersecting Storage Rings (ISR) proton-proton collider, commissioned in 1971, and the proton-antiproton collider at the Super Proton Synchrotron (SPS), which came on the air in 1981 and produced the massive W and Z particles two years later, confirming the unified theory of electromagnetic and weak forces.

The main impetus at present is from the Large Electron-Positron Collider (LEP), where measurements unsurpassed in quantity and quality are testing our best description of sub-atomic Nature, the Standard Model, to a fraction of 1%, soon to reach one part in a thousand. By 1996, the LEP energy was doubled to 90 GeV per beam in LEPII, opening up an important new discovery domain. More high-precision results are expected in abundance throughout the rest of the decade, which should substantially improve our present understanding. The LEP/LEPII missions will by then be largely completed.

LEP data are so accurate that they are sensitive to phenomena that occur at energies beyond those of the machine itself, rather like delicate measurements of earthquake tremors far from an epicentre. This gives us a "preview" of exciting discoveries that may be made at higher energies, and allows us to calculate the parameters of a machine that can make these discoveries. All evidence indicates that new physics, and answers to some of the most profound questions of our time, lie at energies around 1 TeV (1 TeV = 1,000 GeV).

To look for this new physics, the next research instrument in Europe's particle physics armoury is the LHC. In keeping with CERN's cost-effective strategy of building on previous investments, it is designed to share the 27-kilometre LEP tunnel and to be fed by existing particle sources and pre-accelerators. A challenging machine, the LHC will use the most advanced superconducting magnet and accelerator technologies ever employed. LHC experiments are, of course, being designed to look for theoretically predicted phenomena. However, they must also be prepared, as far as possible, for surprises. This will require great ingenuity on the part of the physicists and engineers.

The LHC is a remarkably versatile accelerator. It can collide proton beams with energies around 7-on-7 TeV at beam crossing points of unsurpassed brightness, providing the experiments with high interaction rates. It can also collide beams of heavy ions such as lead with a total collision energy in excess of 1,250 TeV, about thirty times higher than at the Relativistic Heavy Ion Collider (RHIC) under construction at the Brookhaven Laboratory in the US. Joint LHC/LEP operation can supply proton-electron collisions with 1.5 TeV energy, some five times higher than presently available at HERA in the DESY laboratory, Germany. The research, technical and educational potential of the LHC and its experiments is enormous.

At the enormous particle energies achieved in the LHC, where the protons have an energy more than 7000 times their rest energy, the Coulomb field of each particle is compressed into a flat disk for an external observer, and it contains photons of high energy. When the fields of two heavy ions collide, important processes can take place. For example, photon-photon fusion can create an electron-positron pair in which the negatively charged electron is captured by the positively charged, fully stripped nucleus. This nucleus is lost from the collider beam, since its charge is now reduced by one elementary charge and the accelerator fields can no longer keep the particle on its design trajectory. Pair production with capture therefore limits the maximum beam luminosity of the LHC; the process has been calculated in Phys. Rev. A 50, 3980 (1994), and it also plays a role in the production of antihydrogen. Antihydrogen atoms are still the most complex form of antimatter that has been produced artificially.
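The numbers behind the flat-disk picture are easy to check: the Lorentz factor is gamma = E / (m c^2), and the field of an ultrarelativistic charge is concentrated within an angle of order 1/gamma around the plane transverse to its motion. The sketch assumes a 7 TeV proton beam (the 7-on-7 TeV figure quoted above) and a proton rest energy of 0.938272 GeV.

```python
PROTON_REST_ENERGY_GEV = 0.938272  # m_p c^2 in GeV


def lorentz_factor(total_energy_gev, rest_energy_gev=PROTON_REST_ENERGY_GEV):
    """gamma = E / (m c^2) for a particle of total energy E."""
    return total_energy_gev / rest_energy_gev


gamma = lorentz_factor(7000.0)
opening_angle = 1.0 / gamma  # rad, typical angular width of the compressed field
print(f"gamma = {gamma:.0f}, field opening angle ~ {opening_angle:.1e} rad")
```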

Another interesting process is the fusion of two photons into a Higgs particle, the last particle of the Standard Model that has not yet been detected experimentally (apart from the graviton and many other hypothetical particles, but that is another story).

-

Causal perturbation theory is another attempt to put the theoretical framework of (perturbative) Quantum Field Theory on a sound mathematical basis.

Among the main problems solved within the framework of (finite) causal perturbation theory (fCPT) are the ultraviolet and infrared divergences that show up in the calculation of so-called Feynman diagrams. Because fCPT uses only well-defined mathematical objects, divergent quantities are avoided from the start (provided the theory under consideration is meaningful), so no subsequent renormalization of divergent expressions is necessary. To what extent the different renormalization methods correspond to fCPT is a very difficult question, but there are fundamental conceptual differences between the approaches.
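For orientation, the basic objects of the Epstein-Glaser construction on which fCPT rests can be written as follows (standard notation; normalization conventions vary between authors). The S-matrix is a formal power series in a test function g that switches the interaction on and off. At each order, the causal distribution D_n, built from products R'_n and A'_n of lower-order T_k, is split into a retarded part R_n and an advanced part A_n with supports in the forward and backward cones, from which T_n follows:

```latex
\begin{aligned}
S(g) &= \mathbf{1} + \sum_{n=1}^{\infty} \frac{1}{n!} \int d^4x_1 \cdots d^4x_n \,
        T_n(x_1,\ldots,x_n)\, g(x_1)\cdots g(x_n), \\
D_n &= R'_n - A'_n = R_n - A_n, \qquad
\operatorname{supp} R_n \subseteq \Gamma^{+}_{n-1}(x_n), \quad
\operatorname{supp} A_n \subseteq \Gamma^{-}_{n-1}(x_n), \\
T_n &= R_n - R'_n .
\end{aligned}
```

The splitting is a well-defined operation on distributions; it is unique up to local terms whose number is bounded by the singular order of D_n, and these terms take over the role of the finite renormalization constants.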

Applying fCPT to gauge theories leads to an important observation: the classical properties of QFTs can be derived from purely quantum mechanical input, such as Lorentz covariance and unitarity (Int. J. Mod. Phys. A 14, No. 21, 3421 (1999)). This is a more satisfactory strategy than the one usually found in the literature, since classical physics should be derived from the more fundamental quantum principles.

The notion of spontaneous symmetry breaking also becomes obsolete in fCPT: the Higgs particle is merely a consequence of the particle masses, and not vice versa (Ann. Phys. (Leipzig) 8, No. 5, 389 (1999)). This does not, of course, mean that there is no connection between the Higgs particle and the mass generation mechanism.

Some of the people who have worked or are still working on causal perturbation theory and related research areas are: Henry Epstein, Vladimir Jurko Glaser, Raymond Stora, Günter Scharf, Dan Radu Grigore, Michael Dütsch, Andreas Aste, Tobias Hurth, Frank Krahe, Nicola Grillo, Bert Schroer, Jeferson De Lima Tomazelli, Jose Tadeu Teles Lunardi, Bruto Max Pimentel, Marc Wellmann, Kostas Skenderis, Urs Walther, Michel Dubois-Violette, Claude Viallet, L. A. Manzoni, Florin Constantinescu, Dirk Prange, Markus Gut, Ivo Schorn, Adrian Müller, Franz-Marc Boas, Gudrun Pinter, Walter F. Wreszinski, José M. Gracia-Bondia, Serge Lazzarini, Klaus Bresser, Sergio Doplicher, Klaus Fredenhagen, D. Bahns, Romeo Brunetti.

Created August 2000 by Andreas Aste.

Last updated January 2003.
